Streamlining Collaboration: Can Permissioned Attestation Boost Your Developer Productivity Team?

At devactivity.com, we've been following a compelling discussion on GitHub about designing effective community governance models. The core question: how can we sustain multiple voices in collaborative projects without descending into chaos or overburdening contributors? This is a critical challenge for any developer productivity team aiming to streamline feedback and decision-making.

The Challenge: Managing Diverse Voices in Practice

In Discussion #185896, PoX-Praxis revisited several open concerns regarding a 'permissioned attestation' model. The goal is to let diverse perspectives contribute meaningfully without fragmenting the discussion or unduly burdening the original author. Key concerns included:

- Feasibility of permissioned attestation: The criteria for who can become an Attestor were unclear, raising concerns about implicit gatekeeping or control by a small group. Scalability with a large number of participants was also a question.

- Author / Initiator burden: The increased cognitive and operational load on the original author if many attestations are submitted, alongside the psychological burden of distinguishing between 'critical' and 'supplementary' proposals.

- Forking and fragmentation risk: Excessive branching could fragment discussion and context, reducing coherence and requiring mechanisms to suppress or re-integrate branches.

- Real-world failure modes: Learning from known dysfunctions in similar systems like Wikipedia editing dynamics or formal RFC processes.

- Minimal validation path: What a minimal prototype of this ecosystem would look like, and whether existing tools could be used to test it immediately.

A Proposed Solution: Permissioned Attestation by Third Parties

To address these issues, PoX-Praxis proposed a model where 'multiple voices' don't mean free-form editing, but rather the coexistence of multiple validations and contextual stances. This takes the form of an editable proposal mechanism applied to a posted context, allowing for:

- Adding missing assumptions

- Making implicit constraints explicit

- Clarifying interpretive mismatches or confusion

- Suggesting scope limitations or possible branches

To manage this, the original author receives attestations and may accept, revise, or reject them. Attestors are assumed to be qualified participants (e.g., domain-knowledge holders, prior contributors). To reduce author burden, attestations are categorized into 'Critical proposals' (response required) and 'Supplementary proposals' (may be ignored).
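
As a rough illustration of that triage rule, the sketch below models an attestation with a category and a status, then filters down to the items the author is obliged to answer. The class and function names (`Attestation`, `Category`, `Status`, `author_queue`) are hypothetical and do not come from the discussion; this is one possible reading of the model, not a specification of it.

```python
from dataclasses import dataclass
from enum import Enum


class Category(Enum):
    CRITICAL = "critical"            # the author must respond
    SUPPLEMENTARY = "supplementary"  # the author may ignore


class Status(Enum):
    PENDING = "pending"
    ACCEPTED = "accepted"
    REVISED = "revised"
    REJECTED = "rejected"


@dataclass
class Attestation:
    attestor: str
    summary: str
    category: Category
    status: Status = Status.PENDING

    def needs_response(self) -> bool:
        """True while a critical proposal is still waiting on the author."""
        return self.category is Category.CRITICAL and self.status is Status.PENDING


def author_queue(attestations):
    """Return only the attestations the author is obliged to answer."""
    return [a for a in attestations if a.needs_response()]


# Made-up sample data for illustration only.
items = [
    Attestation("alice", "Missing assumption about scale", Category.CRITICAL),
    Attestation("bob", "Optional wording tweak", Category.SUPPLEMENTARY),
    Attestation("carol", "Implicit constraint", Category.CRITICAL, Status.ACCEPTED),
]
print([a.attestor for a in author_queue(items)])
```

Under this reading, supplementary proposals never enter the author's required queue, which is where the intended reduction in burden would come from.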

The model also defines clear role separation:

- Initiator: Raises a question and presents the initial context.

- Proposer: Presents possible actions (options) under that context.

- Attestor: Issues contextual stances by proposing additions or revisions to the context or options.

- Forker: Branches an option under a materially different context.

- Observer: Views and compares options and contexts (for calibration).
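
One way to picture the role separation above is as a small permission table. The sketch below is an assumption-laden illustration: the `Action` names, the `PERMISSIONS` mapping, and the choice to let every role view content are all hypothetical, since the discussion describes the roles but does not prescribe a concrete permission scheme.

```python
from enum import Enum, auto


class Role(Enum):
    INITIATOR = auto()
    PROPOSER = auto()
    ATTESTOR = auto()
    FORKER = auto()
    OBSERVER = auto()


class Action(Enum):
    POST_CONTEXT = auto()         # raise the question and initial context
    PROPOSE_OPTION = auto()       # present a possible action
    SUBMIT_ATTESTATION = auto()   # propose an addition or revision
    RESOLVE_ATTESTATION = auto()  # accept, revise, or reject an attestation
    FORK_OPTION = auto()          # branch under a materially different context
    VIEW = auto()                 # read and compare options and contexts


# Hypothetical mapping: each role gets its own action plus read access.
PERMISSIONS = {
    Role.INITIATOR: {Action.POST_CONTEXT, Action.RESOLVE_ATTESTATION, Action.VIEW},
    Role.PROPOSER: {Action.PROPOSE_OPTION, Action.VIEW},
    Role.ATTESTOR: {Action.SUBMIT_ATTESTATION, Action.VIEW},
    Role.FORKER: {Action.FORK_OPTION, Action.VIEW},
    Role.OBSERVER: {Action.VIEW},
}


def can(role, action):
    """Return True if the given role is allowed to perform the action."""
    return action in PERMISSIONS[role]


print(can(Role.ATTESTOR, Action.SUBMIT_ATTESTATION))
print(can(Role.ATTESTOR, Action.RESOLVE_ATTESTATION))
```

The point of the table is that an Attestor can challenge the context but cannot resolve their own challenge; resolution stays with the Initiator.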

The hypothesis is that this structure allows comprehensive examination across multiple viewpoints while preventing context fixation, authority-formation, and contextual drift.

Feasibility Check: An Expert's View

DavitEgoian, a seasoned observer of open-source community dynamics, offered a thoughtful analysis, positioning the proposal between a formal RFC (Request for Comments) and a standard forum discussion. His insights are crucial for understanding the real-world implications for delivery and technical leadership.

What This Design Addresses Effectively

The strongest aspect, DavitEgoian notes, is the explicit separation of roles (Initiator, Proposer, Attestor). This directly addresses the 'Context Fixation' issue. By formally defining a role for someone who challenges assumptions (the Attestor), the design gives permission for critique without it feeling like a personal attack. This fosters a healthier feedback culture, vital for any high-performing engineering team.

It also tackles the 'Chaos' inherent in free-form discussions. By keeping the Author as the central node who must accept or reject attestations, the model maintains a coherent narrative rather than a sprawling, unreadable comment thread. This structured approach can significantly improve the efficiency of decision-making processes.

Lingering Concerns and Potential Pitfalls

Despite the categorization of proposals, the 'Author Burden' remains a significant unresolved issue. The distinction between 'Critical' and 'Supplementary' proposals is inherently subjective. An Attestor will almost always believe their point is critical, and if the Author disagrees and ignores it, this immediately triggers the 'Gatekeeping' concern the model aimed to avoid.

Furthermore, the 'Qualification' problem for Attestors introduces a dilemma. If you vet Attestors, you add a substantial administrative layer, which can slow down the entire process. If you don't vet them, the system becomes vulnerable to spam or low-quality contributions. For organizations focused on optimizing data engineer KPIs or other critical metrics, these bottlenecks are real risks to project velocity and team morale, and could hinder productivity rather than help it.

Where the Model Might First Break Down

In practice, DavitEgoian predicts the model will most likely fail at the 'Initiator Bottleneck'. For this system to function, the Initiator (Author) must be infinitely patient, unbiased, and consistently active. If the Initiator becomes defensive when an Attestor points out a flaw, or simply gets busy and stops responding, the entire collaborative context freezes. This halt in progress can be detrimental to project timelines and team morale.
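
A team experimenting with the model could at least make this freeze visible. The sketch below flags critical proposals that have gone unanswered for longer than a threshold. Everything here is hypothetical: the function name, the input shape (a mapping from proposal id to the time it was opened), and the seven-day default are illustrative assumptions, not part of the proposal or of DavitEgoian's analysis.

```python
from datetime import datetime, timedelta


def stalled_critical(opened_at_by_id, now, max_wait=timedelta(days=7)):
    """Return ids of unanswered critical proposals older than max_wait, oldest first.

    opened_at_by_id maps a proposal id to the datetime it was submitted and
    should contain only critical proposals that have no author response yet.
    """
    overdue = [
        (opened, proposal_id)
        for proposal_id, opened in opened_at_by_id.items()
        if now - opened > max_wait
    ]
    return [proposal_id for _, proposal_id in sorted(overdue)]


# Made-up sample data for illustration only.
now = datetime(2025, 1, 31)
waiting = {
    "att-1": datetime(2025, 1, 2),
    "att-2": datetime(2025, 1, 29),
    "att-3": datetime(2025, 1, 10),
}
print(stalled_critical(waiting, now))
```

A check like this does not fix a defensive or absent Initiator, but it turns a silent stall into a signal that someone else can act on, for example by forking.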

While the 'Forker' role acts as a safety valve, allowing for alternative paths, frequent forking often signals the breakdown of the original collaboration, not its success. It can lead to fragmentation, which was one of the initial concerns.

A Practical Path Forward: Testing with Existing Tools

The good news is that this model isn't purely theoretical. DavitEgoian suggests a clever way to simulate it immediately using familiar GitHub functionality: a repository with Pull Requests. Here's how:

- The Initiator posts the initial context as a Markdown file in a repository.

- Attestors submit Pull Requests (PRs) to propose changes, additions, or clarifications to the Markdown file.

- The Initiator (repository owner/maintainer) reviews these PRs, merging (Accepting) or closing (Rejecting) them.

- The comment thread on each PR serves as the negotiation space for discussion and refinement.

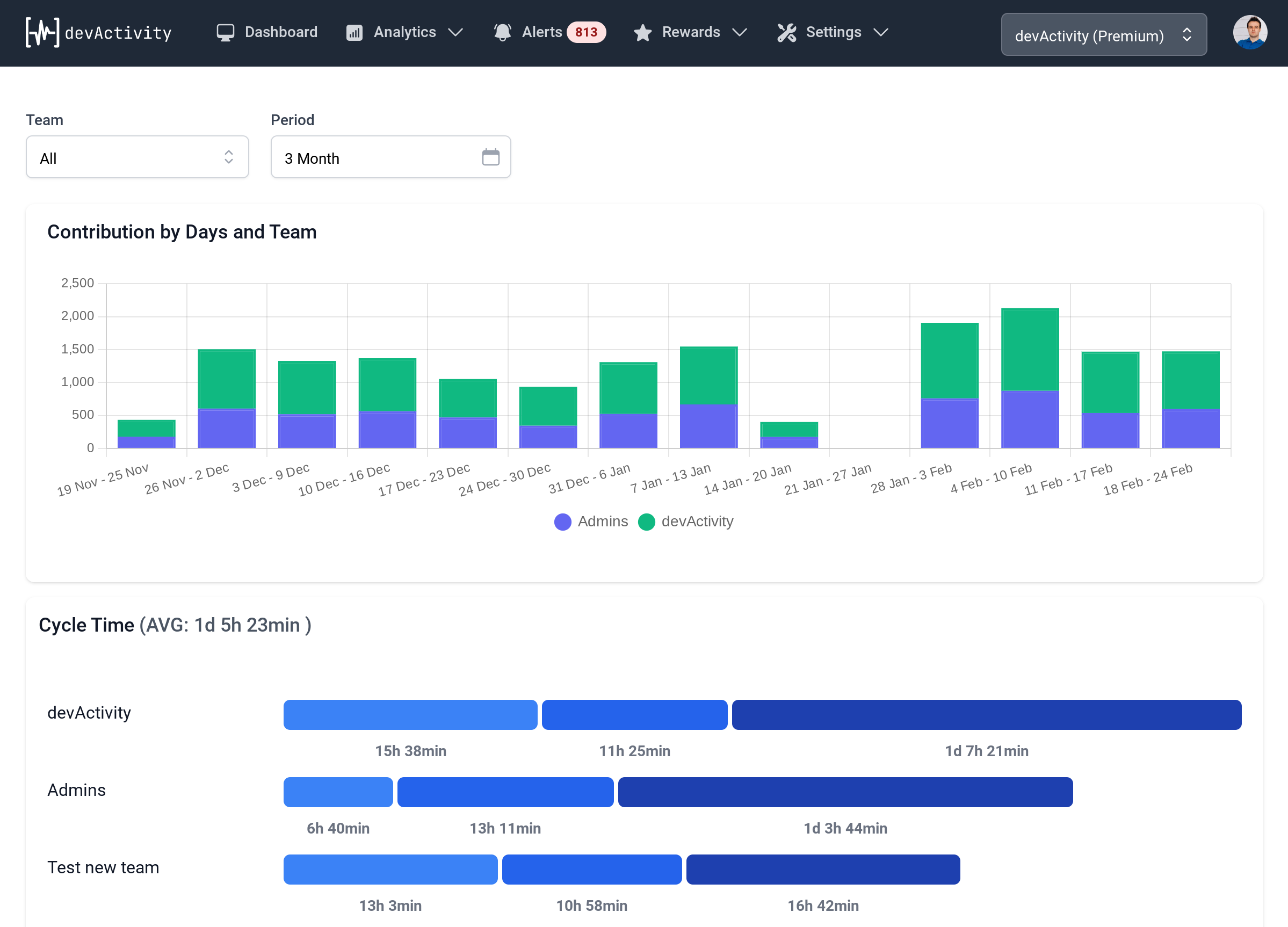

This approach offers a tangible, low-friction way for any developer productivity team to experiment with structured feedback loops. It is permissioned (only the owner merges), sustains multiple voices (anyone can open a PR), and keeps the history clean. This method could even serve as a free alternative to Pluralsight Flow for tracking contribution and engagement patterns in a more granular, context-specific way within your own governance experiments.
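
To make the mapping between pull requests and attestation outcomes concrete, here is a minimal sketch that classifies each PR as accepted, rejected, or pending. It assumes each PR is available as a plain dictionary with `state` and `merged_at` keys, mirroring fields that GitHub's REST API returns for pull requests. The function names and sample records are hypothetical, and the code makes no network calls.

```python
from collections import Counter


def attestation_outcome(pr):
    """Map one pull request record to an attestation outcome.

    Assumes `pr` has a "state" key ("open" or "closed") and a "merged_at"
    key that is None unless the PR was merged.
    """
    if pr.get("merged_at"):
        return "accepted"   # merged by the Initiator
    if pr.get("state") == "closed":
        return "rejected"   # closed without merging
    return "pending"        # still under negotiation in the PR thread


def summarize(prs):
    """Count how many attestations fall into each outcome."""
    return Counter(attestation_outcome(pr) for pr in prs)


# Made-up sample records for illustration only.
sample_prs = [
    {"number": 1, "state": "closed", "merged_at": "2025-01-05T10:00:00Z"},
    {"number": 2, "state": "closed", "merged_at": None},
    {"number": 3, "state": "open", "merged_at": None},
]
print(summarize(sample_prs))
```

Tracking these counts over time gives a simple view of how many proposed revisions the Initiator accepts, rejects, or leaves open.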

Conclusion

The discussion around permissioned attestation highlights a fundamental truth in modern software development: effective collaboration isn't just about tools; it's about well-defined processes and roles. For technical leaders, product managers, and delivery managers, understanding these dynamics is crucial for fostering environments where multiple voices can genuinely contribute without overwhelming the system.

By experimenting with models like the GitHub PR simulation, teams can proactively address potential bottlenecks and build more resilient, inclusive, and ultimately more productive development workflows. The journey to perfect community governance is ongoing, but structured approaches like permissioned attestation offer a promising direction for enhancing collaboration and driving innovation.