GitHub Actions Outage: Unpacking the Real Impact on Software KPI Metrics

GitHub Actions Outage Highlights Criticality of Software KPI Metrics

CI/CD pipelines are the backbone of efficient software development workflows. When these critical services falter, developer productivity and project timelines suffer. A recent GitHub Actions hosted runner incident, documented in a community discussion, was a stark reminder of how much resilient infrastructure and transparent incident management matter, and of how directly an outage affects key software KPI metrics.

Incident Overview: A Disruption to Hosted Runners

On February 2, 2026, GitHub declared an incident affecting GitHub Actions hosted runners. Initially, users reported high wait times and job failures across all labels, while self-hosted runners remained unaffected. The root cause was later identified as a backend storage access policy change by GitHub's underlying compute provider. This change inadvertently blocked access to critical VM metadata, which caused VM operations (create, delete, reimage) to fail and, as a result, made hosted runners unavailable.

The impact wasn't limited to Actions: other GitHub features, including Copilot Coding Agent, Dependabot, and Pages, also experienced degradation. This widespread effect underscores the interconnectedness of modern development tools and the cascading potential of a single point of failure.

Mitigation, Resolution, and the User Experience Gap

GitHub's upstream provider applied a mitigation by rolling back the problematic policy change. Recovery was phased, with standard runners seeing full recovery by 23:10 UTC on February 2nd, and larger runners following by 00:30 UTC on February 3rd.

However, the story didn't end with the official "Incident Resolved" declaration. A crucial insight emerged from the community discussion: the gap between official status and user reality. Hours after the incident was marked resolved, a user, mariush444, reported that their Actions runs remained queued and a Pages build was blocked. The report points to a common challenge in incident management, namely that the lingering effects of a major outage can persist long after the core issue is "fixed."

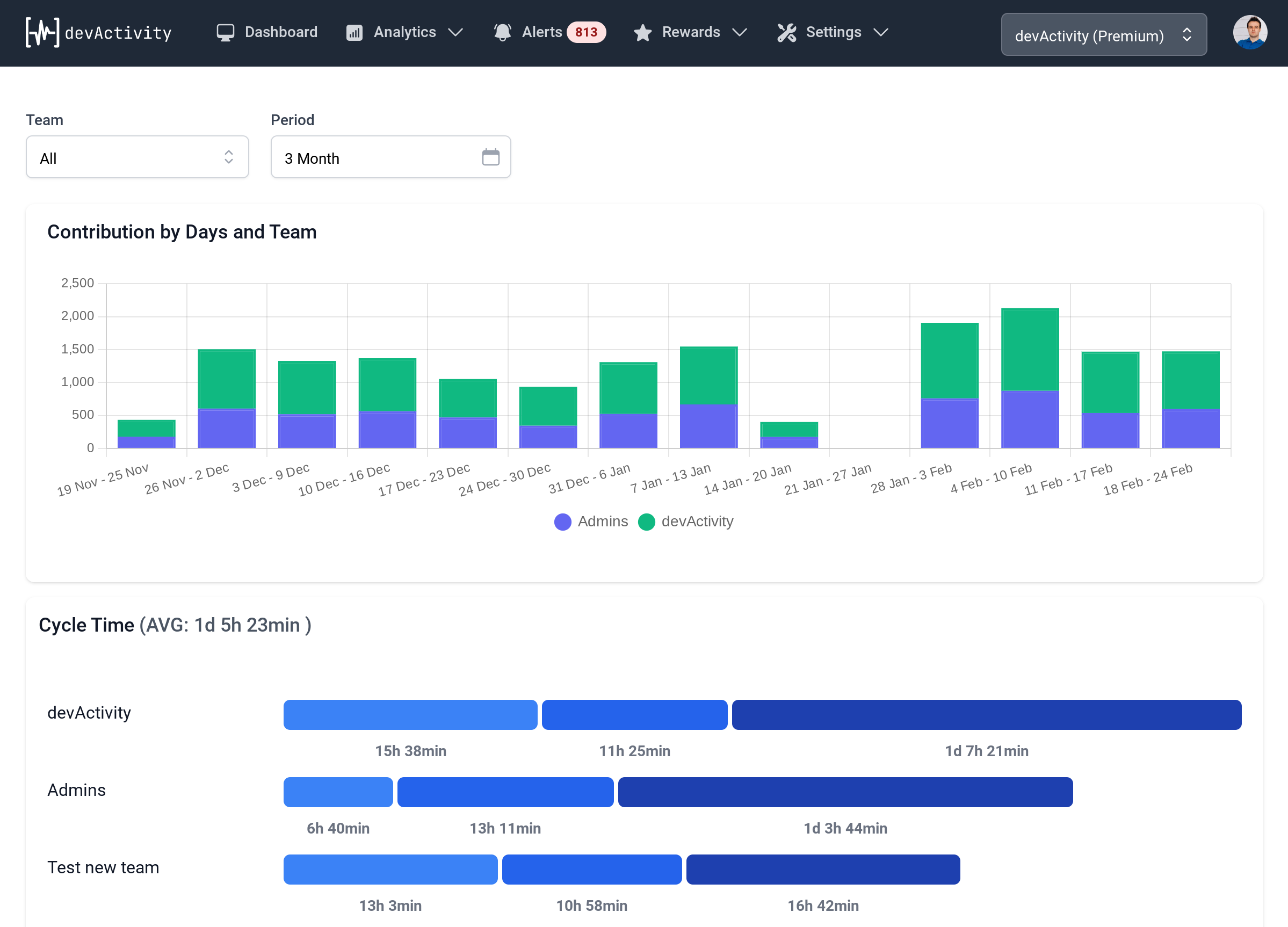

This delay in full operational recovery directly impacts critical metrics. For dev teams, stuck builds mean stalled deployments and wasted time. For product managers, they translate to missed release windows. A robust developer dashboard becomes indispensable here: real-time visibility into queue lengths and build statuses lets teams react before delays compound.
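The gap between "resolved" on a status page and runs that are still queued can be checked mechanically. The following is a minimal sketch, not a description of any GitHub API: the record shape (`id`, `status`, `queued_at`), the function name `stuck_runs`, the 30-minute grace period, and all timestamps are assumptions chosen for illustration.

```python
from datetime import datetime, timedelta, timezone


def stuck_runs(runs, resolved_at, now, grace=timedelta(minutes=30)):
    """Return ids of runs still queued after the vendor declared resolution.

    A run counts as stuck only if the grace period since `resolved_at` has
    passed and the run itself has been waiting longer than `grace`.
    """
    if now < resolved_at + grace:
        return []  # too early to call it a lingering effect
    return [
        r["id"]
        for r in runs
        if r["status"] == "queued" and now - r["queued_at"] > grace
    ]


# Hypothetical data: timestamps are illustrative, not taken from the incident.
UTC = timezone.utc
resolved_at = datetime(2026, 1, 8, 0, 30, tzinfo=UTC)
now = datetime(2026, 1, 8, 3, 0, tzinfo=UTC)
runs = [
    {"id": 101, "status": "queued", "queued_at": datetime(2026, 1, 7, 23, 40, tzinfo=UTC)},
    {"id": 102, "status": "queued", "queued_at": datetime(2026, 1, 8, 2, 50, tzinfo=UTC)},
    {"id": 103, "status": "completed", "queued_at": datetime(2026, 1, 7, 23, 0, tzinfo=UTC)},
]

print("stuck run ids:", stuck_runs(runs, resolved_at, now))
```

A check like this could feed a dashboard alert, so a team learns about lingering queue problems from its own data rather than from the vendor's status page.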

The True Cost: Impact on Software KPI Metrics and Team Productivity

An incident of this magnitude has a profound ripple effect on an organization's software KPI metrics. Consider the following:

- Deployment Frequency: When CI/CD pipelines are down, deployments halt. Even a few hours of disruption can significantly reduce the number of releases within a sprint or month.

- Lead Time for Changes: The time from code commit to production deployment skyrockets. Developers finish features, but they can't be delivered, leading to increasing work-in-progress (WIP) and delayed value delivery.

- Mean Time To Recovery (MTTR): While GitHub's official MTTR might be calculated from the start of the incident to its resolution, the actual MTTR for individual teams, especially those experiencing lingering issues like mariush444, can be much longer. This discrepancy highlights the need for organizations to track their own internal MTTR, reflecting the full impact on their specific workflows.

- Developer Experience and Morale: Nothing saps productivity and morale faster than waiting for tools. Developers become blocked, unable to test, merge, or deploy. The resulting drop in output can even skew a developer's performance review if external tool failures aren't accounted for. Leaders must ensure teams aren't unfairly penalized.

- Cost of Downtime: Beyond productivity, there are direct financial costs associated with delayed features, missed market opportunities, and potential reputational damage.
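Several of the metrics above reduce to simple arithmetic over timestamps. The sketch below shows one way to compute deployment frequency, median lead time for changes, and the difference between a vendor's recovery time and a team's own. The function names and every timestamp are hypothetical and chosen only to make the arithmetic visible; none of the figures come from the incident.

```python
from datetime import datetime, timedelta
from statistics import median


def deployment_frequency(deploy_times, window_start, window_end):
    """Deployments per day inside the half-open window [window_start, window_end)."""
    days = (window_end - window_start) / timedelta(days=1)
    in_window = [t for t in deploy_times if window_start <= t < window_end]
    return len(in_window) / days


def median_lead_time(changes):
    """Median commit-to-deploy time over (committed_at, deployed_at) pairs."""
    seconds = [(deployed - committed).total_seconds() for committed, deployed in changes]
    return timedelta(seconds=median(seconds))


def recovery_time(incident_start, recovered_at):
    """Elapsed time from incident start to a chosen recovery point."""
    return recovered_at - incident_start


# Illustrative data only (assumed, not measured).
deploys = [datetime(2026, 1, 5, 10), datetime(2026, 1, 6, 15), datetime(2026, 1, 8, 9)]
changes = [
    (datetime(2026, 1, 5, 9), datetime(2026, 1, 5, 10)),
    (datetime(2026, 1, 6, 9), datetime(2026, 1, 6, 15)),
    (datetime(2026, 1, 7, 9), datetime(2026, 1, 8, 9)),
]
incident_start = datetime(2026, 1, 7, 19, 0)
vendor_resolved = datetime(2026, 1, 8, 0, 30)   # vendor marks the incident resolved
first_green_run = datetime(2026, 1, 8, 4, 0)    # team's first successful run afterwards

print("deploys per day:", deployment_frequency(deploys, datetime(2026, 1, 5), datetime(2026, 1, 12)))
print("median lead time:", median_lead_time(changes))
print("vendor recovery time:", recovery_time(incident_start, vendor_resolved))
print("team recovery time:", recovery_time(incident_start, first_green_run))
```

Measuring recovery against the team's first successful run, rather than the vendor's resolution timestamp, is what surfaces the discrepancy described in the MTTR bullet.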

Lessons for Technical Leadership and Delivery Managers

This GitHub Actions incident offers valuable takeaways for CTOs, delivery managers, and technical leaders:

- Diversify and Decentralize Where Possible: The unaffected self-hosted runners highlight a critical point. While replicating a cloud provider's infrastructure isn't feasible for most, strategic use of self-hosted runners for critical workflows, or multi-cloud CI/CD strategies, can mitigate single-point-of-failure risks.

- Proactive Observability is Key: Relying solely on a vendor's status page is insufficient. Implement robust internal monitoring for your CI/CD queue lengths, build success rates, and deployment times. An effective developer dashboard should provide real-time insights into your specific pipeline health.

- Transparent Communication, Internally and Externally: GitHub's updates were frequent, but the "resolved" status didn't align with all user experiences. Leaders must communicate clearly about ongoing impacts internally and acknowledge lingering issues transparently externally.

- Strengthen Vendor Management and SLAs: The incident's root cause was an upstream provider's policy change, underscoring the importance of clear Service Level Agreements (SLAs) with critical vendors and strong partnerships for incident response. GitHub's commitment to working with its compute provider is a good example.

- Prioritize Developer Experience (DX): Tool reliability is paramount to DX. Invest in resilient tooling and processes that minimize friction and maximize flow. During incidents, prioritize clear communication and support for developers.
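The internal monitoring recommended above can start small. The following sketch summarizes queue length, build success rate, and longest queue wait from a list of run records, then flags a degraded pipeline. The `Run` shape, the function names, and the thresholds (15 minutes, 80% success) are assumptions for illustration; in practice the records would come from whatever your CI system exposes.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class Run:
    created_at: datetime
    started_at: Optional[datetime]  # None while the run is still queued
    conclusion: Optional[str]       # "success", "failure", or None if unfinished


def pipeline_health(runs, now):
    """Summarize queue length, success rate, and longest current queue wait."""
    queued = [r for r in runs if r.started_at is None]
    finished = [r for r in runs if r.conclusion is not None]
    successes = sum(1 for r in finished if r.conclusion == "success")
    return {
        "queue_length": len(queued),
        # None when nothing has finished, so "no data" is not read as 0%.
        "success_rate": successes / len(finished) if finished else None,
        "longest_queue_wait": max((now - r.created_at for r in queued), default=timedelta(0)),
    }


def is_degraded(health, max_wait=timedelta(minutes=15), min_success_rate=0.8):
    """Flag the pipeline when queue waits or success rate cross assumed thresholds."""
    rate = health["success_rate"]
    too_slow = health["longest_queue_wait"] > max_wait
    too_flaky = rate is not None and rate < min_success_rate
    return too_slow or too_flaky


# Hypothetical snapshot of recent runs.
now = datetime(2026, 1, 8, 12, 0)
runs = [
    Run(datetime(2026, 1, 8, 11, 0), datetime(2026, 1, 8, 11, 1), "success"),
    Run(datetime(2026, 1, 8, 11, 10), datetime(2026, 1, 8, 11, 12), "failure"),
    Run(datetime(2026, 1, 8, 11, 20), None, None),
    Run(datetime(2026, 1, 8, 11, 50), None, None),
]

health = pipeline_health(runs, now)
print(health, "degraded:", is_degraded(health))
```

Keeping the thresholds as parameters makes it easy to tune them per repository, since acceptable queue times differ between a small library and a large monorepo.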

Moving Forward: Building Resilience into Your Delivery Pipeline

The GitHub Actions incident serves as a powerful reminder that even the most robust platforms can experience outages. For organizations striving for high velocity and reliability, this isn't just a news story; it's a call to action. By meticulously tracking software KPI metrics, investing in comprehensive observability, and fostering a culture of resilience and transparent communication, technical leaders can better prepare for, respond to, and recover from inevitable disruptions. The goal isn't to prevent every outage, but to build systems and processes that minimize their impact and ensure your teams can continue to deliver value, even when the unexpected happens.