Optimizing Generative AI Projects: A Guide to Latency, Scalability, and Engineering Performance

The landscape of Generative AI is evolving at breakneck speed, with innovative projects like AI chatbots, resume generators, and sophisticated multi-agent systems moving rapidly from concept to production. But as any seasoned developer knows, transitioning from a proof-of-concept to a robust, scalable, and performant application introduces a unique set of challenges. Recently, P-r-e-m-i-u-m from the GitHub Community sparked a vital discussion, seeking guidance on best practices for optimizing GenAI workflows, tackling inference latency, and ensuring project scalability. The community's response was a goldmine of practical advice, offering a clear roadmap for any team looking to elevate their AI initiatives.

This discussion isn't just about technical tweaks; it's about fostering a culture of efficiency and foresight in AI development. For dev teams, product managers, and CTOs alike, understanding these strategies is crucial when you evaluate the engineering performance of your AI investments.

Conquering LLM Inference Latency: Speed is a Feature

In the world of AI, perceived speed often trumps raw processing power. Users expect instant responses, and even minor delays can degrade the experience. The community emphasized that latency isn't always about the model itself, but how we interact with it.

Stream Responses for Perceived Speed

- The Psychology of Streaming: As one contributor aptly put it, "Users perceive streamed output as way faster even when total time is similar." Implement streaming responses wherever possible to deliver an immediate, engaging user experience.
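To make the streaming point concrete, here is a minimal sketch that measures time-to-first-token against total time. The `fake_token_stream` generator is a hypothetical stand-in for a provider's streaming API (the sleep simulates generation time); no real SDK is assumed.

```python
import asyncio
import time
from typing import AsyncIterator


async def fake_token_stream(text: str, delay_s: float = 0.01) -> AsyncIterator[str]:
    """Hypothetical stand-in for a streaming LLM API: yields one word at a time."""
    for word in text.split():
        await asyncio.sleep(delay_s)  # simulated per-token generation time
        yield word + " "


async def stream_to_user(text: str) -> dict:
    """Consume tokens as they arrive and record when the first one showed up."""
    start = time.perf_counter()
    first_token_s = None
    parts = []
    async for token in fake_token_stream(text):
        if first_token_s is None:
            first_token_s = time.perf_counter() - start
        parts.append(token)  # a real app would flush this to the client here
    total_s = time.perf_counter() - start
    return {
        "text": "".join(parts).strip(),
        "first_token_s": first_token_s,
        "total_s": total_s,
    }
```

Calling `asyncio.run(stream_to_user("some long answer ..."))` returns both timings; the gap between them is the wait that streaming hides from the user.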

Cache Aggressively and Smartly

- Fewer Calls, Better Performance: "Fewer calls beats a smaller model almost every time," highlights a core truth. Implement robust caching for repeated prompts, common contexts, and frequently used API responses. Prompt caching, especially for system prompts and shared context chunks, can significantly cut down both latency and cost.
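As a rough illustration of application-level caching for repeated prompts, the sketch below keys responses on a hash of the model name and prompt. `PromptCache` and the `call` parameter are hypothetical names, and the approach only suits prompts where returning an identical answer is acceptable. It is separate from any provider-side prompt caching feature.

```python
import hashlib
from typing import Callable


class PromptCache:
    """In-memory response cache keyed on (model, prompt). Illustrative only."""

    def __init__(self) -> None:
        self._store: dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(model: str, prompt: str) -> str:
        # The NUL separator keeps ("ab", "c") distinct from ("a", "bc").
        return hashlib.sha256(f"{model}\x00{prompt}".encode("utf-8")).hexdigest()

    def get_or_call(self, model: str, prompt: str, call: Callable[[str, str], str]) -> str:
        """Return a cached response, or invoke `call` once and remember the result."""
        key = self._key(model, prompt)
        if key in self._store:
            self.hits += 1
            return self._store[key]
        self.misses += 1
        response = call(model, prompt)  # `call` is whatever function hits the LLM
        self._store[key] = response
        return response
```

The `hits` and `misses` counters double as the cache-hit metric, so the cache's effect can be measured rather than assumed.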

Choose the Right Model for the Job

- Don't Overdo It: Resist the urge to throw the largest LLM (e.g., GPT-4) at every problem. For many chatbot interactions or template-filling tasks (like a resume generator), smaller, more specialized models such as GPT-3.5 or Claude Haiku can deliver comparable quality with vastly reduced latency and operational costs. Save the heavy-duty models for when their unique reasoning power is truly indispensable.
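One simple way to apply this is a routing function that defaults to the small model and escalates only when needed. The model names, task labels, and `pick_model` itself are placeholders chosen for illustration, not real identifiers.

```python
# Placeholder model names; substitute whatever your provider actually offers.
SMALL_MODEL = "small-model"
LARGE_MODEL = "large-model"

# Hypothetical task labels that a small model is assumed to handle well.
SIMPLE_TASKS = {"chat", "template_fill", "classification"}


def pick_model(task_type: str, needs_multistep_reasoning: bool = False) -> str:
    """Default to the cheaper model; escalate for unknown or reasoning-heavy tasks."""
    if needs_multistep_reasoning or task_type not in SIMPLE_TASKS:
        return LARGE_MODEL
    return SMALL_MODEL
```

Which tasks belong in the "simple" set is an empirical question, best settled by checking output quality on a small evaluation set.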

Optimize Model Execution and Batching

- Technical Tweaks: For those deep in the weeds, techniques like model quantization and optimizing batch sizes can yield significant performance gains. Tools such as ONNX Runtime or TensorRT were specifically recommended for these optimizations.

- Parallelize for Multi-Agent Systems: In complex multi-agent setups, ensure independent agent tasks run in parallel using asynchronous programming (async/await). This simple architectural choice can dramatically cut down overall execution time.
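For the multi-agent case, a minimal sketch with `asyncio.gather` shows the effect. The agents here are hypothetical; each `asyncio.sleep` stands in for an LLM or tool call that does not depend on the others.

```python
import asyncio
import time


async def run_agent(name: str, delay_s: float) -> str:
    """Hypothetical agent: the sleep simulates waiting on an LLM or tool call."""
    await asyncio.sleep(delay_s)
    return f"{name}: done"


async def run_independent_agents() -> tuple[list[str], float]:
    """Run three independent agents concurrently and report elapsed wall time."""
    start = time.perf_counter()
    results = await asyncio.gather(
        run_agent("researcher", 0.2),
        run_agent("summarizer", 0.2),
        run_agent("critic", 0.2),
    )
    return list(results), time.perf_counter() - start
```

Run sequentially, the three calls would take about 0.6 seconds; gathered, the total is close to the slowest single call. This only helps when the agents do not need each other's output.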

Efficient API and Vector Database Integration: The Backbone of RAG

Retrieval-Augmented Generation (RAG) is a cornerstone of many modern GenAI applications. How you integrate your APIs and vector databases directly impacts both performance and maintainability.

Treat Retrieval as a First-Class System

- Dedicated Focus: Don't treat your retrieval mechanism as an afterthought. Design it as a core system, ensuring efficient filtering and stable embeddings. Avoid mixing heavy write operations with hot reads in the same index, which can quietly degrade performance.

Separate Concerns and Standardize

- Clean Code, Easier Debugging: "Keep your database queries separate from your LLM calls. I mean really separate." Inlining database calls within LLM response handlers creates a debugging and optimization nightmare. Abstract your client code for APIs and vector databases, making it modular and reusable.
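A sketch of what "really separate" might look like: retrieval, prompt building, and the LLM call each live in their own function, and only a thin top-level function composes them. The `search` and `complete` callables are hypothetical injection points for your vector database client and LLM client.

```python
from typing import Callable, Sequence


def retrieve_context(
    query: str, search: Callable[[str, int], Sequence[str]], top_k: int = 3
) -> list[str]:
    """Retrieval only: no prompt building and no LLM call in here."""
    return list(search(query, top_k))


def build_prompt(query: str, context: Sequence[str]) -> str:
    """Pure string formatting, so it can be unit-tested without any network."""
    joined = "\n".join(f"- {chunk}" for chunk in context)
    return f"Context:\n{joined}\n\nQuestion: {query}"


def answer(
    query: str,
    search: Callable[[str, int], Sequence[str]],
    complete: Callable[[str], str],
) -> str:
    """Thin composition layer: each step can be profiled or swapped on its own."""
    context = retrieve_context(query, search)
    return complete(build_prompt(query, context))
```

Because each step takes its dependencies as arguments, a slow answer can be traced to retrieval or generation by timing the two calls separately.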

Connection Pooling and Batch Operations

- Resource Management: For vector databases like Pinecone, Weaviate, or Qdrant, use connection pooling to avoid the overhead of opening new connections for every query. Similarly, batch your embedding operations instead of processing inputs one at a time to maximize throughput.
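The batching half of this advice can be sketched without committing to any particular client. Below, `embed_batch` is a hypothetical callable that accepts a list of texts and returns one vector per text; the batch size of 64 is an arbitrary default, not a recommendation from any vendor.

```python
from typing import Callable, Iterator, Sequence


def batched(items: Sequence[str], batch_size: int) -> Iterator[Sequence[str]]:
    """Yield consecutive slices of at most `batch_size` items."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]


def embed_all(
    texts: Sequence[str],
    embed_batch: Callable[[Sequence[str]], list[list[float]]],
    batch_size: int = 64,
) -> list[list[float]]:
    """Embed texts in batches: one call per batch instead of one per text."""
    vectors: list[list[float]] = []
    for batch in batched(texts, batch_size):
        vectors.extend(embed_batch(batch))
    return vectors
```

With 1,000 inputs and a batch size of 64, this makes 16 embedding calls instead of 1,000.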

Pre-computation is Your Friend

- Embed Once, Reuse Often: For static or semi-static content, pre-compute and store embeddings. For a resume generator, common sections or phrases can be embedded once and reused, eliminating redundant computation.
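One way to embed once and reuse is to key stored vectors on a hash of the content, so only new or changed text is sent to the embedding model. In this sketch the `store` is a plain dict and `embed_batch` is a hypothetical embedding function; in practice the store would be a database or file.

```python
import hashlib
from typing import Callable, Sequence


def content_key(text: str) -> str:
    """Stable key derived from the text itself, so edits trigger re-embedding."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def embed_missing(
    texts: Sequence[str],
    store: dict[str, list[float]],
    embed_batch: Callable[[Sequence[str]], list[list[float]]],
) -> int:
    """Embed only texts not already in `store`; return how many were embedded."""
    # dict.fromkeys removes duplicates while preserving order.
    missing = [t for t in dict.fromkeys(texts) if content_key(t) not in store]
    if missing:
        for text, vector in zip(missing, embed_batch(missing)):
            store[content_key(text)] = vector
    return len(missing)
```

Running this over the same corpus twice embeds nothing the second time, which is the saving the bullet above describes.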

Managed Solutions vs. Self-Hosting

- Infrastructure Overhead: Especially when starting, consider managed vector database solutions (e.g., Pinecone, Weaviate Cloud). They significantly reduce infrastructure overhead, allowing your team to focus on core application logic.

- Chunking Strategies: Regardless of your chosen vector database, invest time in proper chunking strategies. How you break down and embed your data often matters more for retrieval quality than the specific database technology.
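As a starting point for experimenting with chunking, here is a minimal fixed-size splitter with overlap, counted in words. The sizes are illustrative assumptions; real pipelines often split on tokens, sentences, or document structure instead.

```python
def chunk_words(text: str, chunk_size: int = 200, overlap: int = 40) -> list[str]:
    """Split text into word-based chunks that share `overlap` words with the previous one."""
    if chunk_size < 1 or not 0 <= overlap < chunk_size:
        raise ValueError("require chunk_size >= 1 and 0 <= overlap < chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks: list[str] = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks
```

The overlap keeps a sentence that straddles a boundary retrievable from either side, at the cost of storing some words twice.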

Architecting for Scalability and Maintainability: Future-Proofing Your GenAI Projects

A well-structured project isn't just easier to maintain; it's inherently more scalable. The community offered robust advice on setting up your GenAI applications for long-term success.

The Orchestration Layer: Your LLM Command Center

- Centralized Control: If every part of your application directly interacts with the LLM, complexity spirals. Implement a thin orchestration layer that centralizes prompt management, retry logic, and fallback mechanisms. This keeps the rest of your codebase clean and simplifies scaling.
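The retry and fallback part of such a layer can be sketched in a few lines. Everything named here (`complete_with_fallback`, `AllModelsFailed`, the `call` parameter) is hypothetical, and a production version would catch provider-specific errors rather than a bare `Exception`.

```python
import time
from typing import Callable, Sequence


class AllModelsFailed(RuntimeError):
    """Raised when every model in the fallback chain has exhausted its retries."""


def complete_with_fallback(
    prompt: str,
    models: Sequence[str],
    call: Callable[[str, str], str],
    max_attempts: int = 2,
    backoff_s: float = 0.5,
) -> tuple[str, str]:
    """Try each model in order with retries; return (model_used, response)."""
    last_error = None
    for model in models:
        for attempt in range(max_attempts):
            try:
                return model, call(model, prompt)
            except Exception as exc:  # assumption: narrow this in real code
                last_error = exc
                time.sleep(backoff_s * (2 ** attempt))  # exponential backoff
    raise AllModelsFailed(f"no model succeeded out of {list(models)}") from last_error
```

Because callers only see this one function, retry counts and the fallback order can change without touching the rest of the codebase.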

Modular Design and Clean Architecture

- Clear Interfaces: Follow clean architecture principles, keeping components modular. For multi-agent systems, ensure each agent is a distinct module with well-defined interfaces. This makes swapping models, adding new features, or debugging specific components significantly easier.

Prompt Management and Versioning

- Prompts as Code: "Separate your prompts from your code. Put them in config files or a dedicated prompts module." This practice is invaluable for iterating on prompt design without digging through application logic.

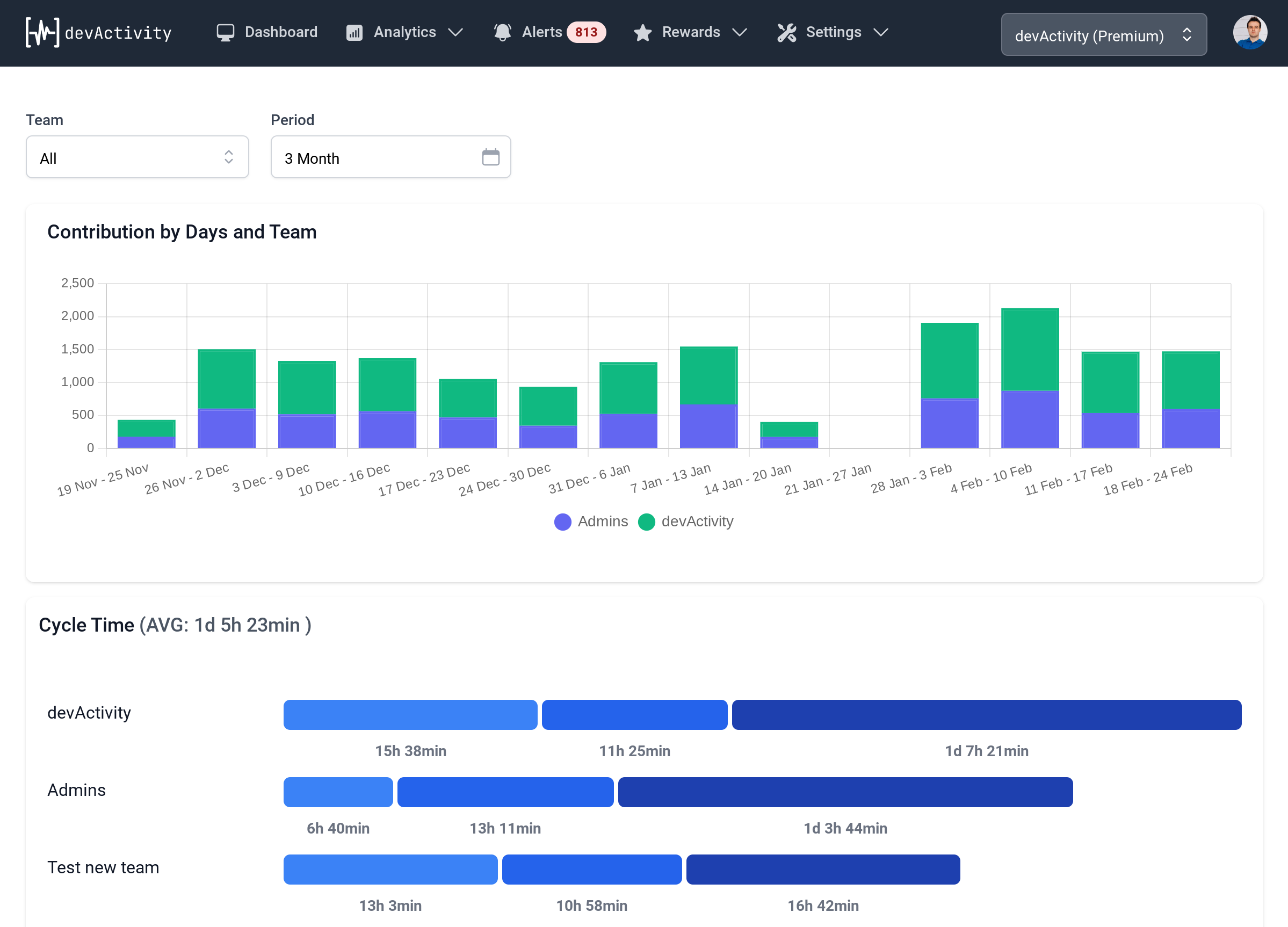

- Version Control is Key: Treat your prompts like critical code. Version them alongside your application code using Git. This ensures that when a prompt change inadvertently breaks functionality, you can easily track what changed and revert if necessary. The Git history also gives you a record of how each prompt evolved, which is useful context when you review why output quality changed over time.
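A dedicated prompts module can be as small as the sketch below. The prompt name, version string, and template text are invented for illustration; the point is that prompts live in one file that Git tracks, and the version travels with every rendered prompt so it can be logged.

```python
from string import Template

# Hypothetical prompts module: edit prompts here, not inside application logic.
PROMPTS = {
    "resume_summary": {
        "version": "2",
        "template": Template(
            "Summarize this resume for a $role position:\n$resume_text"
        ),
    },
}


def render_prompt(name: str, **variables: str) -> tuple[str, str]:
    """Return (version, rendered_prompt); raises KeyError if a variable is missing."""
    entry = PROMPTS[name]
    return entry["version"], entry["template"].substitute(**variables)
```

Logging the returned version next to each LLM response makes it possible to tie a change in output quality back to a specific prompt revision.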

Abstract Your LLM Calls

- Service Layer Abstraction: Encapsulate all LLM interactions behind a dedicated service layer. This makes it trivial to swap out models, integrate new providers, or add application-wide features like logging, rate limiting, or caching without touching core business logic.
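A minimal sketch of such a service layer, using `typing.Protocol` to describe what any provider must offer. `LLMProvider`, `EchoProvider`, and `LLMService` are hypothetical names; the echo provider exists only so the example runs without a network.

```python
from typing import Protocol


class LLMProvider(Protocol):
    """Anything with a `complete` method can act as a provider."""

    def complete(self, prompt: str) -> str: ...


class EchoProvider:
    """Stand-in provider for tests and local development."""

    def complete(self, prompt: str) -> str:
        return f"echo: {prompt}"


class LLMService:
    """Single entry point for LLM calls; cross-cutting concerns are added here."""

    def __init__(self, provider: LLMProvider) -> None:
        self._provider = provider
        self.call_log: list[str] = []

    def complete(self, prompt: str) -> str:
        self.call_log.append(prompt)  # logging hook; rate limiting or caching fit here too
        return self._provider.complete(prompt)
```

Swapping models then means passing a different provider object to `LLMService`, with no change to the business logic that calls `complete`.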

Containerization and CI/CD

- Consistent Deployment: Use containerization (Docker) with CI/CD pipelines for consistent, reliable deployment across environments. This is fundamental for scaling and ensuring that your development, staging, and production environments behave predictably.

Tools, Habits, and the Unsexy Truth of Optimization

Beyond architectural patterns, the community highlighted essential tools and disciplined habits that differentiate successful GenAI projects.

Metrics and Observability: See What's Happening

- Track Everything: "Simple metrics go a long way." Track call counts, latency, and cache hits. Tools like LangSmith or Weights & Biases are invaluable for logging LLM calls, responses, and performance metrics. When issues arise, you need to see exactly what prompt went out and what came back. This data is critical for any meaningful engineering performance review.
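Before adopting a dedicated tool, the three metrics named above can be collected with the standard library alone. The `Metrics` class and the metric names below are hypothetical; this is a sketch of the idea, not a replacement for a tracing product.

```python
import time
from collections import defaultdict
from contextlib import contextmanager


class Metrics:
    """Tiny in-process collector for call counts and latencies."""

    def __init__(self) -> None:
        self.counts: dict[str, int] = defaultdict(int)
        self.latencies_s: dict[str, list[float]] = defaultdict(list)

    def incr(self, name: str) -> None:
        """Count an event such as a cache hit."""
        self.counts[name] += 1

    @contextmanager
    def timed(self, name: str):
        """Time the wrapped block and count it, even if it raises."""
        start = time.perf_counter()
        try:
            yield
        finally:
            self.counts[name] += 1
            self.latencies_s[name].append(time.perf_counter() - start)

    def summary(self, name: str) -> dict:
        """Return the call count and mean latency for one metric name."""
        samples = self.latencies_s.get(name, [])
        avg = sum(samples) / len(samples) if samples else 0.0
        return {"calls": self.counts.get(name, 0), "avg_latency_s": avg}
```

Wrapping each LLM call in `with metrics.timed("llm_call"):` and calling `metrics.incr("cache_hit")` on cache hits is enough to show where time is going.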

Build Evaluation Sets Early

- Prevent Regressions: Create a small, representative evaluation set (10-20 examples with expected outputs) early in the project lifecycle. Run these tests regularly to catch regressions when iterating on models or prompts.
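An evaluation set does not need a framework to be useful. The sketch below checks that each output contains an expected substring; the cases, field names, and `run_eval` function are hypothetical, and substring matching is a deliberately crude check that many tasks will outgrow.

```python
from typing import Callable, Sequence

# Hypothetical cases; a real set would hold 10-20 representative examples.
EVAL_SET = [
    {"input": "Summarize: Jane led a team of five engineers.", "expected_substring": "team"},
    {"input": "Extract the job title: Senior Data Analyst at Acme.", "expected_substring": "data analyst"},
]


def run_eval(generate: Callable[[str], str], cases: Sequence[dict]) -> dict:
    """Run every case through `generate` and report which inputs failed."""
    failures = [
        case["input"]
        for case in cases
        if case["expected_substring"].lower() not in generate(case["input"]).lower()
    ]
    return {
        "total": len(cases),
        "passed": len(cases) - len(failures),
        "failures": failures,
    }
```

Running this after every prompt or model change turns "it still seems fine" into a pass count that can be compared across revisions.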

Don't Over-Engineer Prematurely

- Iterate and Optimize: "Don't over-engineer early." Get something working, identify where the actual bottlenecks and pain points are, then address those systematically. Premature optimization can lead to complex, unused abstractions.

Valuable Resources

- Practical Guides: The Anthropic Cookbook and OpenAI's guides offer practical implementation patterns beyond basic examples.

- Community Wisdom: Simon Willison's blog is consistently praised for its practical, real-world LLM application insights.

- Vector DB Docs: The documentation for specific vector databases (Pinecone, Weaviate) often contains excellent guides on chunking and indexing strategies.

- Specialized Tools: For specific tasks like text-to-SQL, resources like ai2sql.io can be useful for prototyping.

The "unsexy truth," as one contributor put it, is that most significant improvements come from "profiling, measuring, and fixing bottlenecks methodically." Building instrumentation early allows you to see where time and money are truly going, making your optimization efforts data-driven and effective. A well-maintained Git history, coupled with good analytics, can provide the historical context needed to understand performance changes over time.

Conclusion: Building Better AI, Together

The GitHub discussion initiated by P-r-e-m-i-u-m underscores a collective commitment to advancing GenAI development. From optimizing LLM inference latency and streamlining API/vector database integrations to architecting for scalability and adopting disciplined workflows, the community's insights provide a powerful blueprint. By embracing these best practices, engineering teams can build more robust, performant, and maintainable Generative AI applications, ensuring a strong return on their innovative efforts.

What are your go-to strategies for optimizing GenAI projects? Share your insights in the comments below!