5 Ways AI-Powered Development Integrations Are Revolutionizing Software Delivery in 2026

The AI Revolution in Software Delivery is Here

Let's be honest: software development can be a messy business. Between sprawling codebases, complex deployments, and ever-evolving technologies, it's a wonder anything gets shipped at all. But what if AI could step in and smooth out the wrinkles? In 2026, that is increasingly what's happening. AI-powered development integrations are no longer a futuristic fantasy; they are changing how software is conceived, built, and deployed. They go beyond automating simple tasks: they augment human capabilities, giving developers the insights and assistance they need to work smarter, not harder. As organizations push for greater efficiency and faster time-to-market, understanding and adopting these approaches is becoming crucial. Let's dive into five key ways these integrations are reshaping software delivery.

1. Intelligent Observability for Faster Troubleshooting

Imagine a world where troubleshooting complex distributed systems doesn't involve endless hours of digging through logs and sifting through metrics. That's the promise of AI-powered observability. Modern cloud applications, often built as collections of microservices running on platforms like Amazon EKS, Amazon ECS, or AWS Lambda, present significant observability challenges. According to AWS, troubleshooting in such environments can be incredibly time-consuming, requiring a deep understanding of each service and manual correlation of information from different sources. Few engineers have that depth across every layer, and the resulting skill gap directly lengthens Mean Time to Recovery (MTTR). Conversational observability, powered by generative AI, offers a way out. It gives engineers a self-service way to diagnose and resolve cluster issues, which can cut MTTR and reduce the burden on experts. Think of it as an assistant that analyzes large volumes of telemetry data, proposes likely root causes, and suggests fixes, all through a conversational interface. This is particularly valuable in Kubernetes environments, where navigating multiple layers of abstraction (pods, nodes, networking, logs, events) can be daunting.

Impact: Potentially a 30-50% reduction in MTTR, depending on the environment and how incidents are handled today, along with far less time spent on manual troubleshooting.

2. Streamlined AI Gateway Management

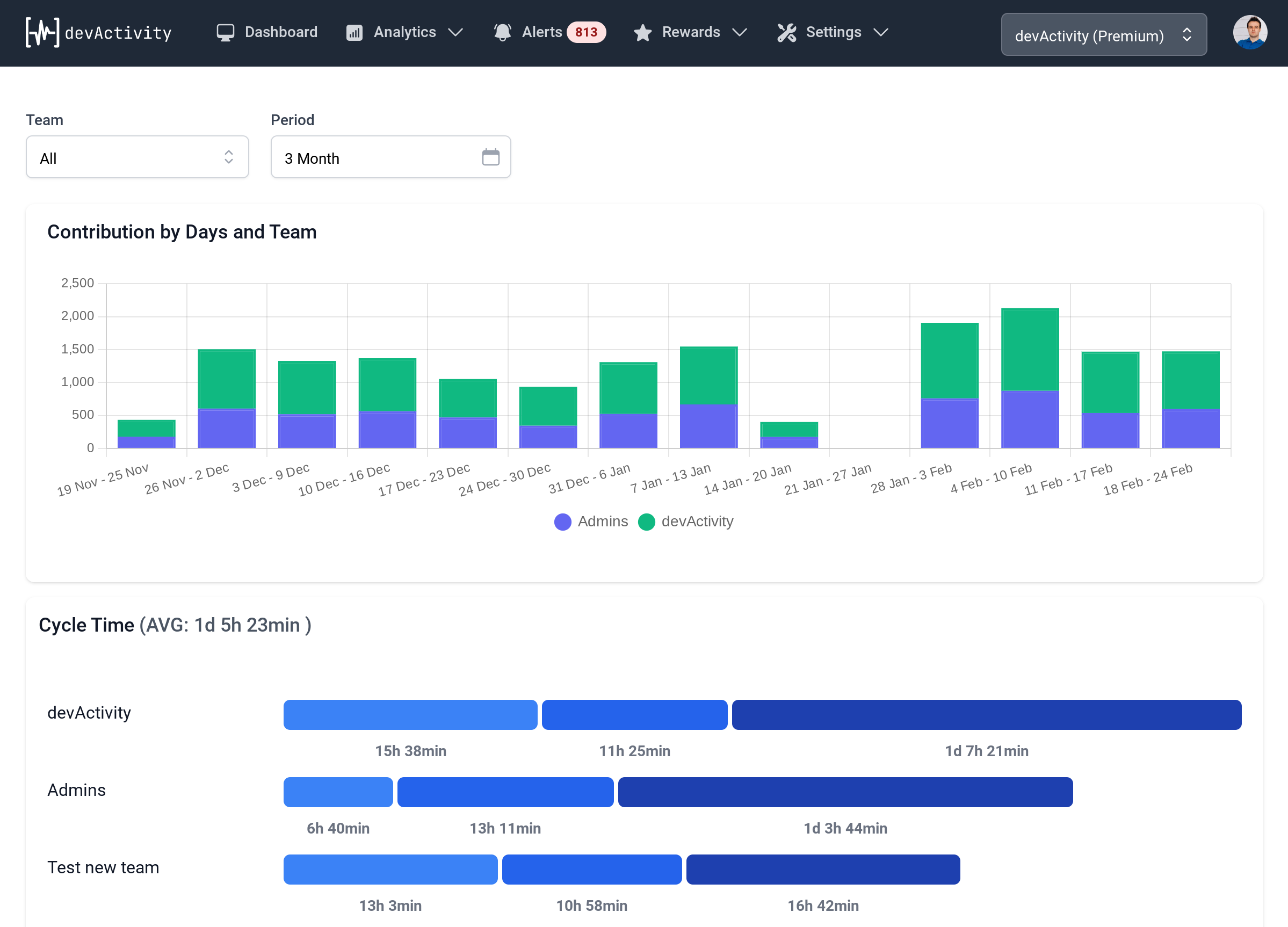

As organizations increasingly adopt generative AI models like those offered by Amazon Bedrock, governing their usage becomes paramount. This includes managing authorization, quota enforcement, tenant isolation, and cost control. An AI gateway, built using services like Amazon API Gateway, provides a way to control access to these models at scale. Such gateways offer key capabilities, including request authorization that integrates with existing identity systems (e.g., JWT validation), usage quotas and request throttling, lifecycle management, canary releases, and AWS WAF integration. Furthermore, Amazon API Gateway response streaming enables real-time delivery of model outputs, enhancing the user experience. Dynatrace, for example, has developed a reference architecture for an AI gateway that demonstrates how to achieve granular control over LLM access using fully managed AWS services. This type of integration is crucial for ensuring responsible and efficient use of AI within the enterprise. The per-tenant usage data a gateway collects can also feed your developer productivity dashboard, so you can track the impact of your AI investments.
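To make the quota and throttling ideas concrete, here is a minimal Python sketch of a per-tenant limiter that combines a token-bucket throttle with a fixed request quota. It is an illustration only, not how Amazon API Gateway implements its limits; the `TenantLimiter` class, its parameters, and the return strings are all hypothetical names chosen for this example.

```python
class TenantLimiter:
    """Hypothetical per-tenant limiter: token-bucket throttle plus a request quota."""

    def __init__(self, rate_per_sec: float, burst: int, quota: int) -> None:
        self.rate_per_sec = rate_per_sec  # steady-state refill rate (tokens/second)
        self.burst = burst                # bucket capacity, i.e. the largest burst
        self.quota = quota                # total requests allowed in the quota period
        self.tokens = float(burst)        # start with a full bucket
        self.used = 0                     # requests counted against the quota
        self.last_refill = 0.0            # timestamp of the previous refill

    def allow(self, now: float) -> str:
        """Return "ok", "throttled", or "quota_exceeded" for a request at time `now`."""
        if self.used >= self.quota:
            return "quota_exceeded"
        # Refill the bucket in proportion to elapsed time, capped at capacity.
        elapsed = max(0.0, now - self.last_refill)
        self.tokens = min(float(self.burst), self.tokens + elapsed * self.rate_per_sec)
        self.last_refill = now
        if self.tokens < 1.0:
            return "throttled"
        self.tokens -= 1.0
        self.used += 1
        return "ok"


# One limiter per tenant keeps a noisy tenant from starving the others.
limiters = {"tenant-a": TenantLimiter(rate_per_sec=1.0, burst=2, quota=3)}
results = [limiters["tenant-a"].allow(t) for t in (0.0, 0.0, 0.0, 1.0, 10.0)]
print(results)
```

Passing the timestamp in explicitly keeps the sketch deterministic; a real gateway would rely on the managed service's own throttling and quota settings rather than application code like this.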

Impact: Enhanced security, improved cost management, and simplified governance of AI model usage.

3. Automated Code Migration and Upgrades

Migrating code from older SDK versions to newer ones can be a tedious and error-prone process. Automated migration tools, some rule-based and some AI-assisted, are making this task significantly easier. For instance, the Migration Tool for the AWS SDK for Java 2.x leverages OpenRewrite, an open-source automated code refactoring tool that applies predefined rewrite recipes, to upgrade supported 1.x code to 2.x code. The tool automates much of the transition, transforming code for all service SDK clients as well as the Amazon Simple Storage Service (S3) TransferManager high-level library. Some manual migration may still be required for unsupported transforms, but the tool significantly reduces the effort involved. AWS has made this tool generally available. On the AI-assisted side, Amazon Q Developer can be used to upgrade the AWS SDK for Go from V1 to V2, accelerating the migration and reducing technical debt. As explored in our recent post, "Is the Cult of Constant 'Trying Things Out' Killing Your Engineering Efficiency?", avoiding unnecessary churn and focusing on strategic upgrades is key to keeping your software engineering KPIs healthy.
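The general idea behind rule-based migration can be sketched in a few lines of Python. This toy example is not OpenRewrite and not the AWS migration tool: real tools operate on parsed syntax trees and also rewrite call sites, whereas this sketch only renames import lines with a regular expression. The `IMPORT_RENAMES` table is a tiny illustrative subset, and `rewrite_imports` is a hypothetical helper name.

```python
import re

# Illustrative subset of 1.x -> 2.x type renames; a real tool covers far more.
IMPORT_RENAMES = {
    "com.amazonaws.services.s3.AmazonS3": "software.amazon.awssdk.services.s3.S3Client",
    "com.amazonaws.regions.Regions": "software.amazon.awssdk.regions.Region",
}

# Matches plain Java import lines such as "import a.b.C;".
IMPORT_LINE = re.compile(r"^(\s*import\s+)([\w.]+)(\s*;.*)$")


def rewrite_imports(java_source: str) -> tuple[str, list[str]]:
    """Rewrite known imports; return the new source and any unhandled 1.x imports."""
    out_lines = []
    unhandled = []
    for line in java_source.splitlines():
        match = IMPORT_LINE.match(line)
        if match:
            prefix, name, suffix = match.groups()
            if name in IMPORT_RENAMES:
                line = f"{prefix}{IMPORT_RENAMES[name]}{suffix}"
            elif name.startswith("com.amazonaws."):
                # No rule for this type: flag it for manual migration.
                unhandled.append(name)
        out_lines.append(line)
    return "\n".join(out_lines), unhandled


source = (
    "import com.amazonaws.services.s3.AmazonS3;\n"
    "import com.amazonaws.services.sqs.AmazonSQS;\n"
    "class Uploader {}"
)
migrated, needs_manual_work = rewrite_imports(source)
print(migrated)
print("Needs manual migration:", needs_manual_work)
```

The list of unhandled imports mirrors the point above: automated rules cover the supported transforms, and whatever falls outside them is surfaced for a developer to migrate by hand.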

Impact: Reduced migration time, fewer errors, and faster adoption of new SDK features and performance enhancements.

4. Serverless Caching Solutions

Consistent caching is a critical requirement in distributed architectures, where maintaining data integrity and performance across multiple application instances can be challenging. The AWS .NET Distributed Cache Provider for Amazon DynamoDB addresses this challenge with a serverless caching solution. The provider implements the ASP.NET Core IDistributedCache interface, allowing .NET developers to use the fully managed and durable infrastructure of DynamoDB as their caching layer with minimal code changes. A distributed cache can significantly improve the performance and scalability of an ASP.NET Core app, especially when hosted by a cloud service or a server farm, because every instance reads from the same shared store instead of keeping its own local copy. AWS highlights the ease of integration and the benefits of relying on DynamoDB's infrastructure for caching. Being able to add a distributed cache quickly can improve application responsiveness and reduce the load on backend systems. Surfacing cache metrics in your developer dashboard then gives insight into cache performance and effectiveness.
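The pattern an application typically builds on top of such a cache is cache-aside: check the cache, fall back to the backend on a miss, and store the result with an expiry. Below is a minimal Python sketch of that pattern. It is not the .NET provider's API; `InMemoryDistributedCache` is a hypothetical in-memory stand-in for a shared store such as DynamoDB, and `get_or_load` is an illustrative helper name.

```python
import time
from typing import Callable, Optional


class InMemoryDistributedCache:
    """Hypothetical stand-in for a shared cache; values are bytes with an expiry."""

    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        self._clock = clock
        self._items: dict[str, tuple[bytes, float]] = {}

    def get(self, key: str) -> Optional[bytes]:
        entry = self._items.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self._clock() >= expires_at:
            del self._items[key]  # lazily evict expired entries on read
            return None
        return value

    def set(self, key: str, value: bytes, ttl_seconds: float) -> None:
        self._items[key] = (value, self._clock() + ttl_seconds)

    def remove(self, key: str) -> None:
        self._items.pop(key, None)


def get_or_load(cache: InMemoryDistributedCache, key: str,
                loader: Callable[[], bytes], ttl_seconds: float) -> bytes:
    """Cache-aside: return the cached value, or load it and cache it on a miss."""
    cached = cache.get(key)
    if cached is not None:
        return cached
    value = loader()  # the expensive backend call happens only on a miss
    cache.set(key, value, ttl_seconds)
    return value


backend_calls = []

def load_profile() -> bytes:
    backend_calls.append("hit backend")
    return b'{"name": "example"}'

cache = InMemoryDistributedCache()
first = get_or_load(cache, "profile:42", load_profile, ttl_seconds=60)
second = get_or_load(cache, "profile:42", load_profile, ttl_seconds=60)
print(len(backend_calls))  # the second call is served from the cache
```

Injecting the clock makes expiry easy to test; with a real shared store, every application instance would see the same entries, which is what keeps the cache consistent across a server farm.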

Impact: Improved application performance, enhanced scalability, and reduced infrastructure management overhead.

5. AI-Assisted Code Generation and Completion

While not directly an integration in the traditional sense, AI-assisted code generation and completion tools are rapidly becoming indispensable parts of the development workflow. Tools like GitHub Copilot and Amazon Q Developer use machine learning models to suggest code snippets, complete lines of code, and even generate entire functions from natural language descriptions. This can significantly speed up development, reduce the likelihood of errors when suggestions are reviewed carefully, and help developers learn new APIs and frameworks more quickly. By automating repetitive tasks and providing intelligent suggestions, these tools free up developers to focus on more complex and creative aspects of their work. As we noted in "Cut MTTR by 50%: How AI-Powered Root Cause Analysis is Revolutionizing Incident Response", leveraging AI to augment human capabilities is a key trend in modern software development.

Impact: Faster development cycles, reduced error rates, and increased developer satisfaction.

Conclusion

AI-powered development integrations are transforming the software delivery landscape in profound ways. From intelligent observability and streamlined AI gateway management to automated code migration, serverless caching, and AI-assisted code generation, these integrations offer significant benefits in efficiency, cost savings, and developer productivity. As we move further into 2026, organizations that embrace these approaches will be best positioned to deliver high-quality software faster and more reliably. The future of software development is intelligent, and the time to integrate is now.